Microsoft’s New AI Vasa App Makes Photos Talk and Sing

Microsoft published a research paper this week highlighting a new AI model called VASA-1 that can transform a single picture and audio clip of a person into a realistic video of them lip-syncing — with facial expressions, head movements, and all.

The AI model was trained on AI-generated images from generators like DALL·E-3, which the researchers then layered with audio clips. The results are images-turned-videos of talking faces.

The researchers built on technology from competitors such as Runway and Nvidia, but state in the paper that their method of doing things is higher-quality, more realistic, and “significantly outperforms” existing methods.

Related: Adobe’s Firefly Image Generator Was Partially Trained on AI Images From Midjourney

The researchers said the model can take in audio of any length and generate a talking face in accordance with the clip.

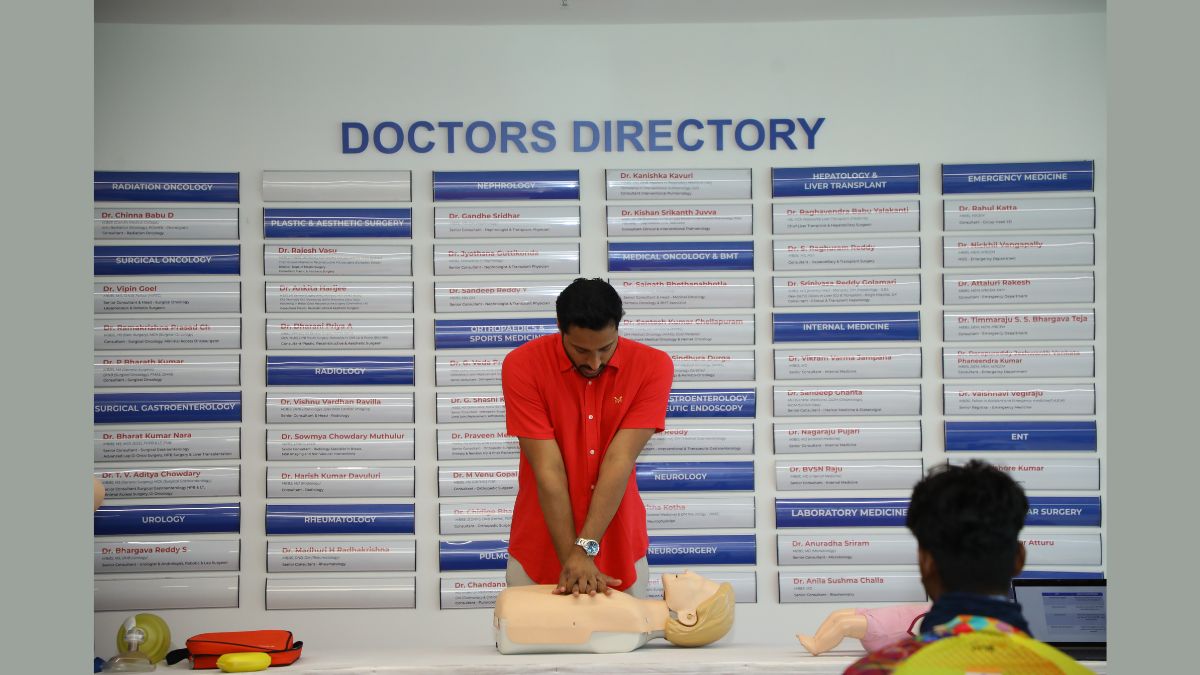

The only image that wasn’t AI-generated that the researchers experimented with was the Mona Lisa. They made the iconic image lip-sync to Anne Hathaway’s “Paparazzi,” which starts with the lines “Yo I’m a paparazzi, I don’t play no yahtzee.” A screenshot of the video mid-frame. Credit: Entrepreneur

A screenshot of the video mid-frame. Credit: Entrepreneur

The Mona Lisa was one example of a photo input that the AI model was not trained on — but could manipulate anyway. The model could also transform artistic photos, take in singing audios, and handle speech in languages that weren’t English.

The researchers emphasized that the model could work in real-time with a demo video that showed the model instantly animating images with head movements and facial expressions.

Deepfakes, or digitally altered media of a person that could spread misinformation or take someone’s likeness without permission, are a risk posed by advanced AI that can generate digital media with relatively few reference points.

Related: Tennessee Passes Law Protecting Musicians From AI Deepfakes

Microsoft addressed that concern generally in the paper, with the researchers stating, “We are opposed to any behavior to create misleading or harmful contents of real persons, and are interested in applying our technique for advancing forgery detection.”

The researchers stated that their technique had potentially positive applications too, like improving accessibility and enhancing educational efforts.

Google demoed a similar research project last month, showcasing an AI capable of taking a photo and creating a video from it that the user can then control with their voice. The AI was able to add head movements, blinks, and hand gestures.